Abstract

Vision-Language-Action (VLA) models have shown remarkable potential in visuomotor control and instruction comprehension through end-to-end learning. However, current VLA models face two significant challenges: they are slow at inference time, and they require extensive pre-training on large amounts of robotic data, which makes real-world deployment difficult. In this paper, we introduce TinyVLA, a new family of compact vision-language-action models that offers two key advantages over existing VLA models: (1) faster inference, and (2) improved data efficiency, eliminating the need for a pre-training stage. TinyVLA is built from two essential components: (1) initializing the policy backbone with robust, high-speed multimodal models, and (2) integrating a diffusion policy decoder during fine-tuning to enable precise robot actions. We conducted extensive evaluations of TinyVLA both in simulation and on real robots, demonstrating that our approach significantly outperforms the state-of-the-art VLA model, OpenVLA, in speed and data efficiency while delivering comparable or superior performance. Additionally, TinyVLA exhibits strong generalization across various dimensions, including language instructions, novel objects, unseen positions, changes in object appearance, background variations, and environmental shifts, often matching or exceeding the performance of OpenVLA. We believe that TinyVLA offers an interesting perspective on utilizing pre-trained multimodal models for policy learning.
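The second component above, a diffusion policy decoder that generates actions conditioned on the multimodal backbone, can be illustrated with a minimal DDPM-style sketch. This is not TinyVLA's implementation: the linear noise schedule, the toy linear `ToyNoisePredictor` standing in for the decoder network, the 7-dimensional action, and the random 16-dimensional vector standing in for backbone features are all hypothetical choices made for illustration.

```python
import numpy as np


def make_schedule(num_steps=50, beta_start=1e-4, beta_end=2e-2):
    """Linear DDPM noise schedule (an assumption, not TinyVLA's actual schedule)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


class ToyNoisePredictor:
    """Hypothetical stand-in for the diffusion decoder: a linear map from
    [noisy action, backbone feature, normalized timestep] to predicted noise."""

    def __init__(self, action_dim, feat_dim, seed=0):
        rng = np.random.default_rng(seed)
        in_dim = action_dim + feat_dim + 1
        self.W = rng.normal(scale=0.01, size=(in_dim, action_dim))

    def __call__(self, noisy_action, feat, t, num_steps):
        t_feat = np.full((noisy_action.shape[0], 1), t / num_steps)
        x = np.concatenate([noisy_action, feat, t_feat], axis=1)
        return x @ self.W


def diffusion_loss(model, action, feat, alpha_bars, rng):
    """Training objective: noise a clean action at a random step t and
    regress the injected noise (standard epsilon-prediction MSE)."""
    t = rng.integers(len(alpha_bars))
    noise = rng.normal(size=action.shape)
    noisy = np.sqrt(alpha_bars[t]) * action + np.sqrt(1.0 - alpha_bars[t]) * noise
    pred = model(noisy, feat, t, len(alpha_bars))
    return float(np.mean((pred - noise) ** 2))


def sample_actions(model, feat, action_dim, betas, alphas, alpha_bars, rng):
    """Inference: start from Gaussian noise and run the DDPM reverse process,
    conditioning every denoising step on the backbone features."""
    num_steps = len(betas)
    x = rng.normal(size=(feat.shape[0], action_dim))
    for t in reversed(range(num_steps)):
        eps = model(x, feat, t, num_steps)
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            x = mean + np.sqrt(betas[t]) * rng.normal(size=x.shape)
        else:
            x = mean
    return x


rng = np.random.default_rng(0)
betas, alphas, alpha_bars = make_schedule()
feat = rng.normal(size=(4, 16))  # hypothetical backbone features for a batch of 4
model = ToyNoisePredictor(action_dim=7, feat_dim=16)
loss = diffusion_loss(model, rng.normal(size=(4, 7)), feat, alpha_bars, rng)
actions = sample_actions(model, feat, 7, betas, alphas, alpha_bars, rng)
print(actions.shape)  # (4, 7)
```

In a real system the linear predictor would be replaced by a trained network and the feature vector by embeddings from the pre-trained multimodal backbone; the sketch only shows how the training loss and the iterative denoising loop fit together.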

Generalization Experiments of TinyVLA

1. Instruction generalization

Instruction: Upright the tipped-over green mug.

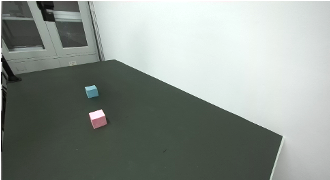

Instruction: Pick the pink cube.

Note: Both objects were seen during training, but in different tasks.

Our policy did not overfit to the seen trajectories but instead picked up the cube and then released it.

Instruction: Pick the car and place into the box.

Note: The toy car is an entirely unseen object.

Our policy recognizes the unseen toy car and places it into the box with human assistance.

2. Object & appearance generalization

3. Background generalization

4. Distractors in bimanual settings

5. View generalization

BibTeX

@article{wen2024tinyvla,
  title={TinyVLA: Towards Fast, Data-Efficient Vision-Language-Action Models for Robotic Manipulation},
  author={Wen, Junjie and Zhu, Yichen and Li, Jinming and Zhu, Minjie and Wu, Kun and Xu, Zhiyuan and Cheng, Ran and Shen, Chaomin and Peng, Yaxin and Feng, Feifei and others},
  journal={arXiv preprint arXiv:2409.12514},
  year={2024}
}